AI Presentation Tools Compared: Can They Actually Build Slides That Teach?

A hands-on, no-nonsense review of Genspark, Gamma, Presentations.AI, and Manus for creators who care more about clarity than about looking impressive.

I'm knee-deep in creating an AI curriculum for beginners, which means I need to build a lot of slides. Training materials, workshop decks, and reference guides are the kind of content that needs to teach people something, not just look impressive in a screenshot.

Since I'm already experimenting with AI tools for everything else in my work, this felt like the perfect test case. Could these presentation tools handle the structural heavy lifting while I focused on making the content actually useful for learning?

I tested the free tiers of four tools (Genspark AI Slides, Gamma, Presentations.AI, and Manus) to see what they can deliver. My conclusion? They're not great, and they're definitely not ready to replace your current workflow. But they saved me hours in ways I didn't expect.

How I Approached This

I kept it systematic (product manager brain, can't help it):

Content research: Used Perplexity and Grok to gather material on "Prompt Engineering Best Practices"

Side note: Grok is surprisingly good at surfacing what the X community is actually talking about; Perplexity handles the broader research better

Outline creation: Fed everything to Gemini for structuring

Gemini really shines at taking messy information and organizing it logically

Generation testing: Pasted the outline into each tool (3-5 minutes per tool)

Reality check: Evaluated what they actually produced vs. what I needed

Export testing: Because what's the point if you can't get your slides out cleanly?

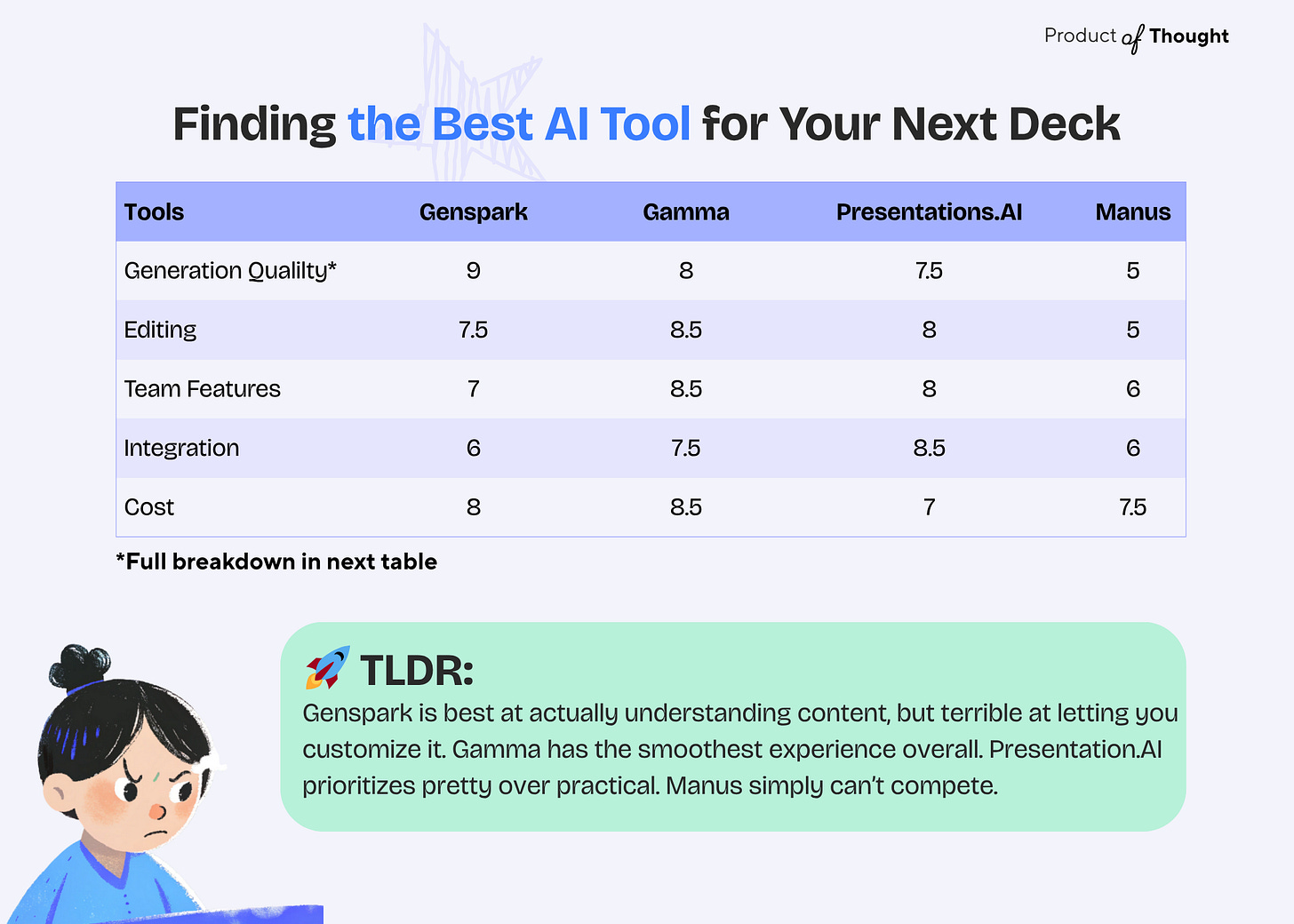

What I Actually Cared About

I weighted my evaluation based on what matters for educational content:

Generation Quality: Does this make sense? Will someone understand the content?

Editing Experience: Can I fix what needs fixing without wanting to quit?

Team Features: Does it work for collaboration?

Integration: Will it export without breaking everything?

Cost: Is the free version actually usable?

The Results: Reality Check Time

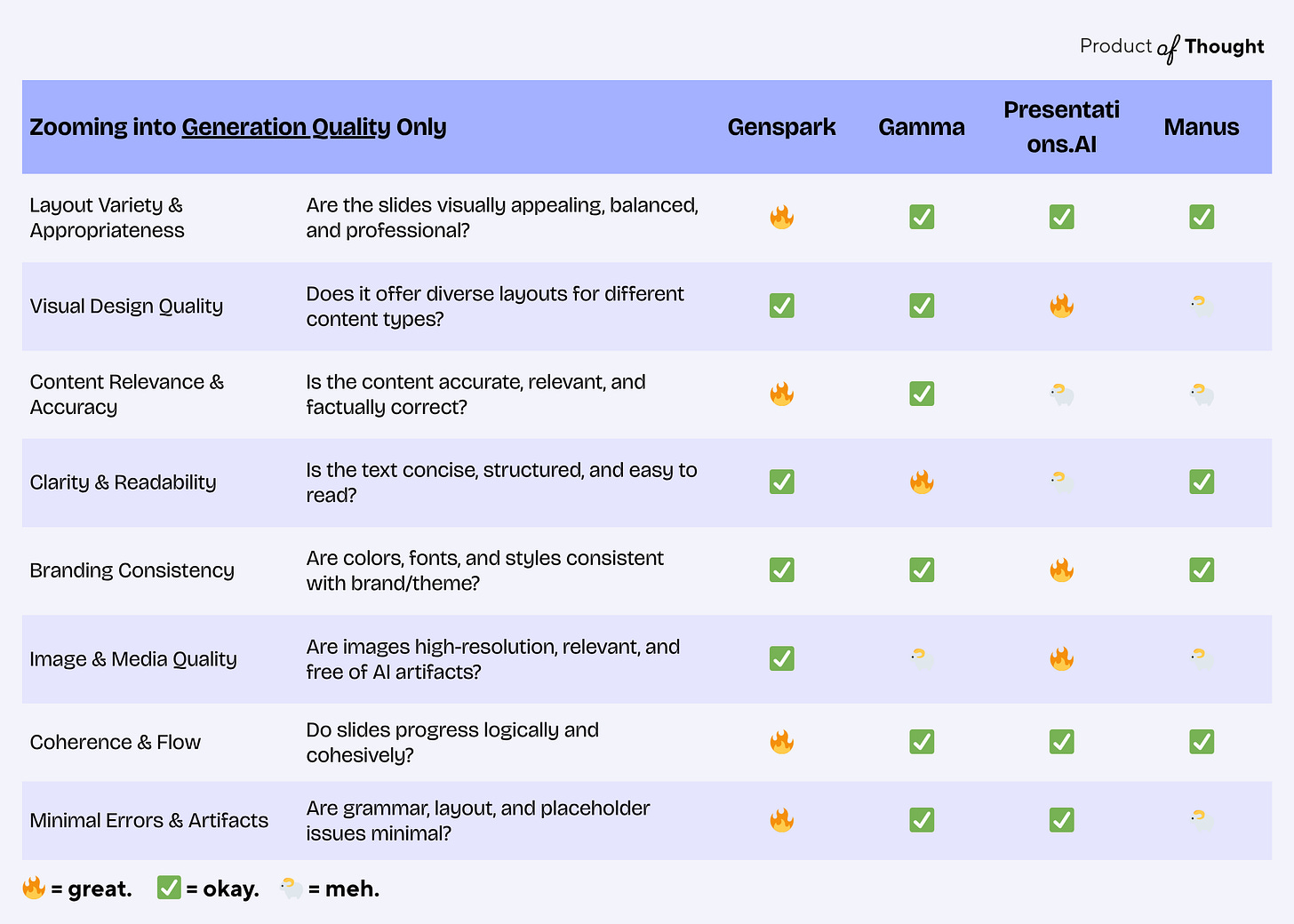

And here's a closer look at generation quality alone:

The short version:

Genspark: Best at actually understanding content, terrible at letting you customize it.

Gamma: Smoothest experience overall, but the content is crap.

Presentations.AI: Prioritizes pretty over practical.

Manus: Can’t even compare…

What Each Tool Actually Does

Genspark: The Deck Creator for Solopreneurs

This tool produces the most solid content structure I've encountered. It's research-backed, logically sequenced, and reads like someone who actually understands the subject matter wrote it. The visual approach is refreshingly restrained, with mostly clean icons and diagrams that serve a purpose.

Here's what's interesting: Genspark doesn't ask what colors you want or what your brand looks like. It just makes decisions about what will work best for your content type. This is both brilliant and infuriating. Their choices were usually better than what I would have picked, but when I wanted to adjust something, there was no easy way to do it.

Want to change your color scheme? You'll need to click through every single text box, background, and icon individually. The editing experience is truly painful. Want to collaborate with others? There doesn't seem to be an option for that.

Gamma: The Slide Maker for Teams

Gamma wins on user experience. Everything feels intuitive, the collaboration features work, and you can make changes without fighting the interface. It generates visually appealing slides quickly.

But there's a trade-off: sometimes the focus on making things look good means the content gets diluted. You'll find beautiful graphics that don't add to the message. They're just there to make the slide feel "complete," which amounts to a lot of fluff without substance.

Presentations.AI had good branding tools and felt familiar (like Google Slides), but the content quality was inconsistent. Information would drift between slides or end up in weird places.

Manus just wasn't ready. Slow, generic, messy. Felt like an early prototype.

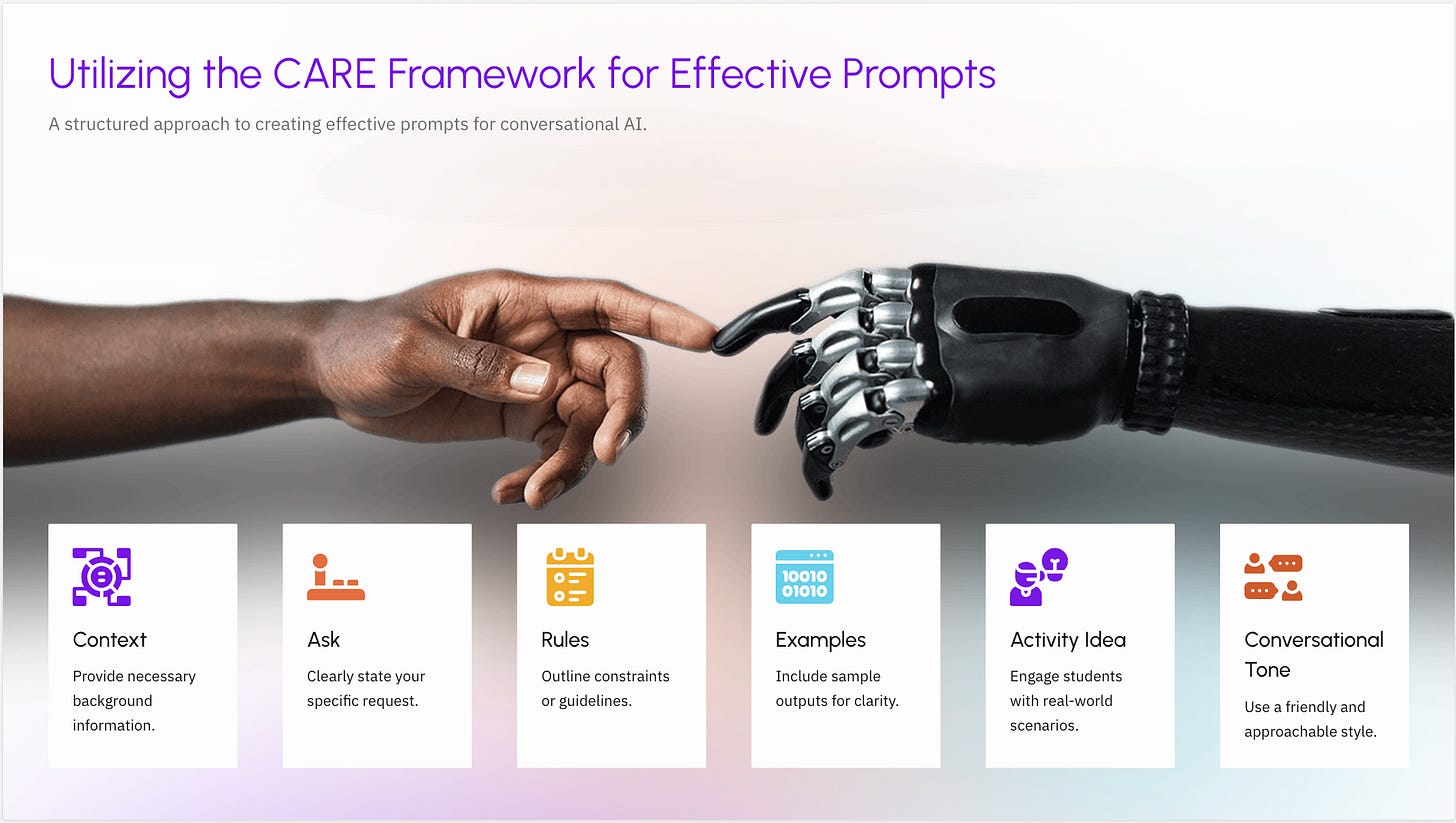

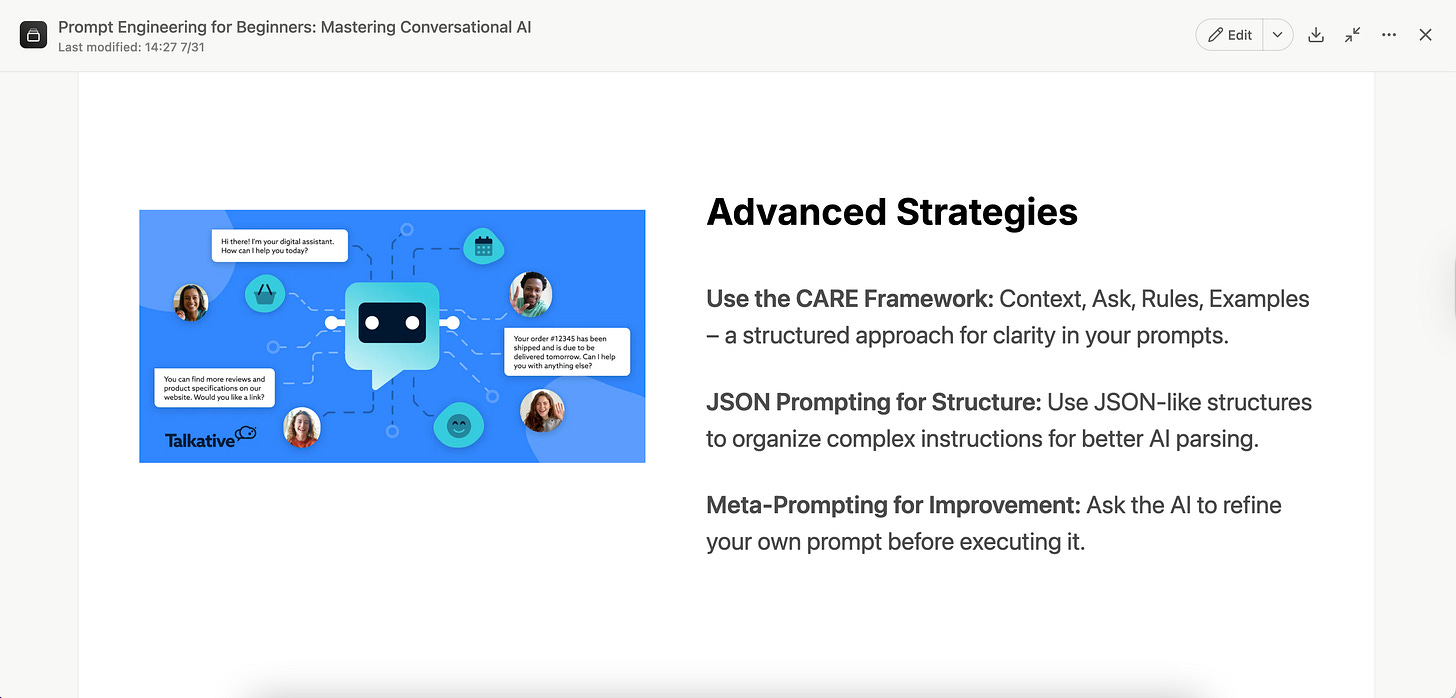

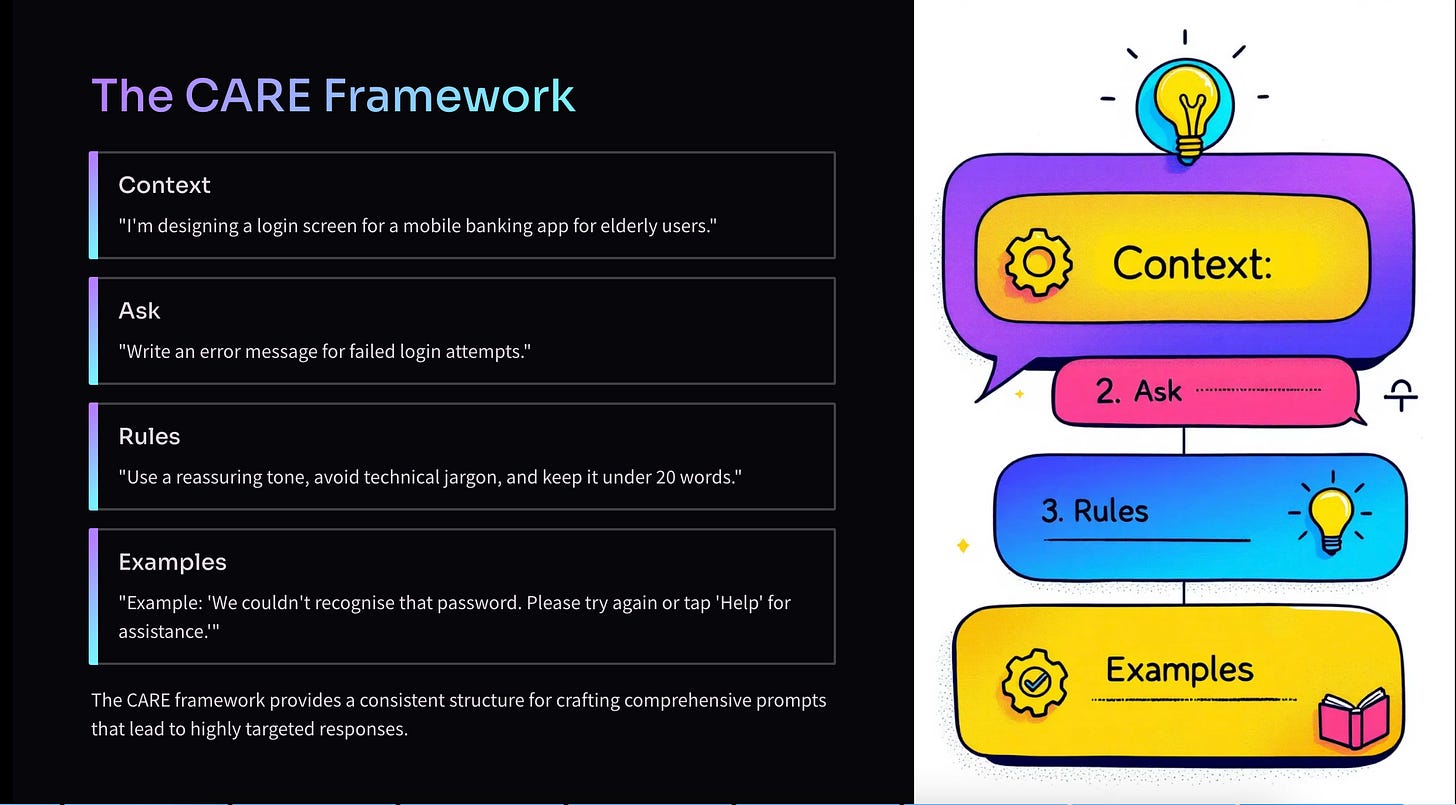

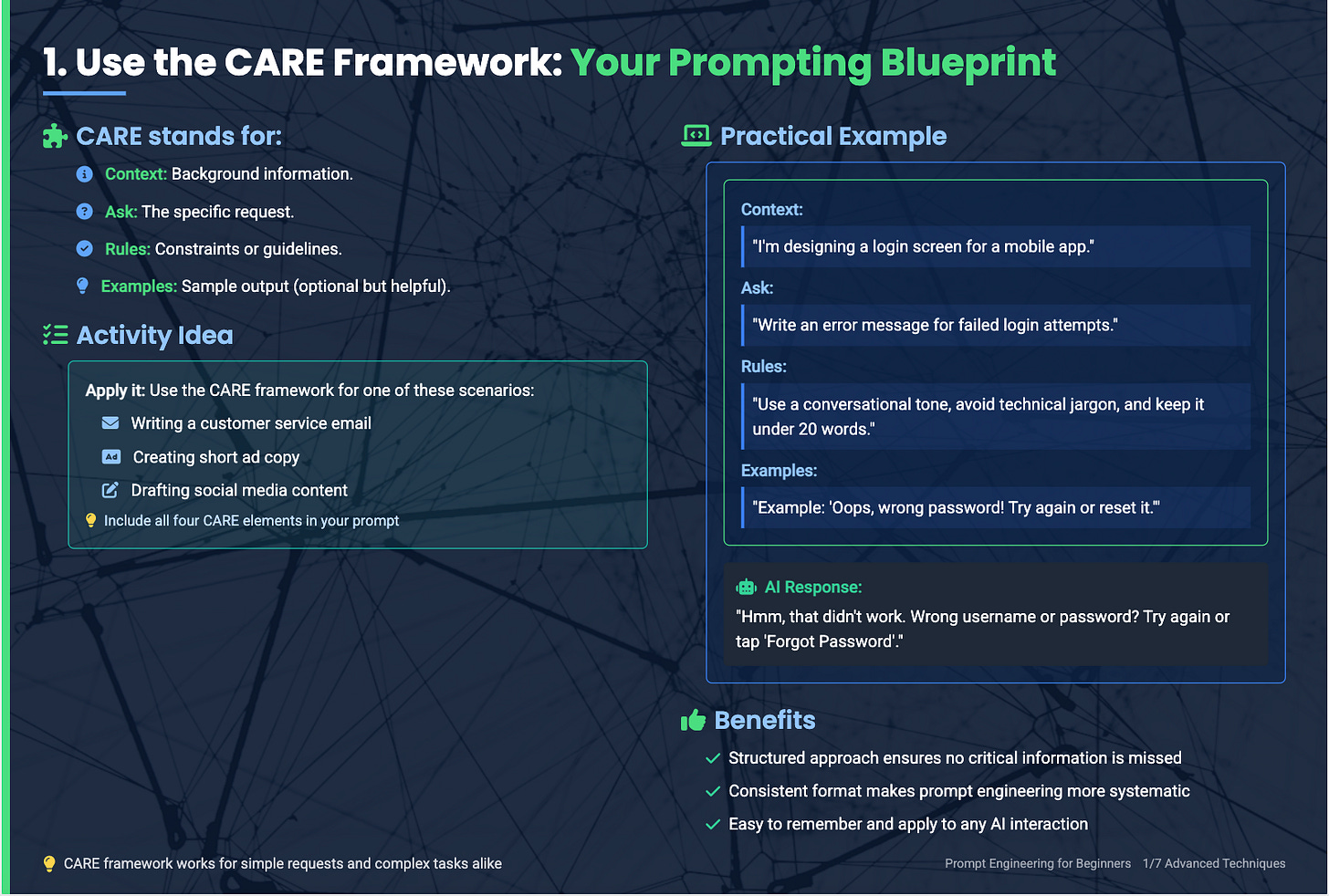

Take a look at how each tool illustrates the "CARE framework" for prompt engineering. At first glance, Presentations.AI's slide appears impressive, but on closer inspection, it added incorrect content. Manus removed some of the content I provided and added other content to the same slide, which undermined my entire point. Gamma pulled in an image from the web that looks completely off-brand. Only the slide created by Genspark is acceptable (a bit wordy, but at least acceptable).

The Plot Twist: I Didn't Use Any of These Slides

After all this testing, I ended up building my final deck in Canva (boo…). None of these tools produced slides I could use as-is.

However, I didn't start from scratch. Genspark's content structure became the foundation of my entire presentation. The way it organized complex concepts, the logical flow between topics, and the key points it identified all shaped my final slides.

So while these tools failed at their stated purpose (making finished slides), they succeeded at something more valuable: giving me a solid framework to build on and eliminating the "staring at a blank page" problem.

What's Missing

The core issue isn't that these tools are imperfect, but that they seem to be solving the wrong problem. They're optimized for visual appeal, when what educational content (or really any presentation deck) needs is clarity and logic.

These tools would be far more useful if they could:

Create graphics that reinforce the concept, not just fill white space

Understand that a training slide needs different treatment than a sales pitch

Export with clean formatting that survives the journey to PowerPoint or Google Slides

Design slides around the content, asking questions like "what does the learner need to understand?" rather than "how can we make this look impressive?"

The Bigger Picture

These tools represent where we are with AI right now: incredibly good at generating starting points, not great at delivering finished products.

Delivering a great deck isn't all about creating impressive images and designs. It's about structuring complex information with text and impactful visuals so your key messages reach your intended audience effectively.

And if AI can handle that foundational work, I can spend my time perfecting the act of presenting: pacing, interaction, follow-up questions, and so on.

Where This Leaves Me

AI presentation tools aren't revolutionary, and they're not going to replace your current process anytime soon. But they've carved out an interesting niche as research assistants and structure generators that happen to output slides.

If you're building educational content or anything where substance matters more than style, they might be worth experimenting with: not as complete solutions, but as sophisticated first drafts.

Just don't expect to use what they create directly. Think of them as really capable research assistants who can get you 50% of the way there.

Have you experimented with AI tools for creating slides? I'd love to hear about your experiences and lessons learned, especially if you've tried other tools. Please share!

Next, I'm rolling up my sleeves for a deep dive into Microsoft 365 Copilot and Google Gemini, two contenders in the AI productivity ecosystem. Both promise frictionless integration with core work tools, but do they deliver good presentation slides?
